Climate-Friendly Kubernetes-Native GPU Provisioning from Amsterdam

Deploy your AI service with standard Kubernetes manifests.

Your inference pipeline needs GPUs. Your team already runs everything in Kubernetes, but adding GPU compute shouldn’t mean choosing between vendor lock-in and operational overhead, especially when compliance requirements demand European data residency.

Here’s a straightforward way to provision GPUs on Dutch infrastructure.

Finding GPUs you can use

For European research institutions and government agencies, relying on US-controlled infrastructure isn’t just a technical concern—it’s increasingly a question of institutional autonomy and long-term strategic independence.

The alternative is to provision GPU instances yourself and integrate them with your clusters. That works, but now you’re maintaining infrastructure that constantly needs attention instead of building your product.

GPU compute should work like any other Kubernetes resource: straightforward provisioning, standard orchestration, no architectural compromises. That’s what Gardener-managed Kubernetes on Dutch infrastructure provides.

How Gardener-Managed Kubernetes Works

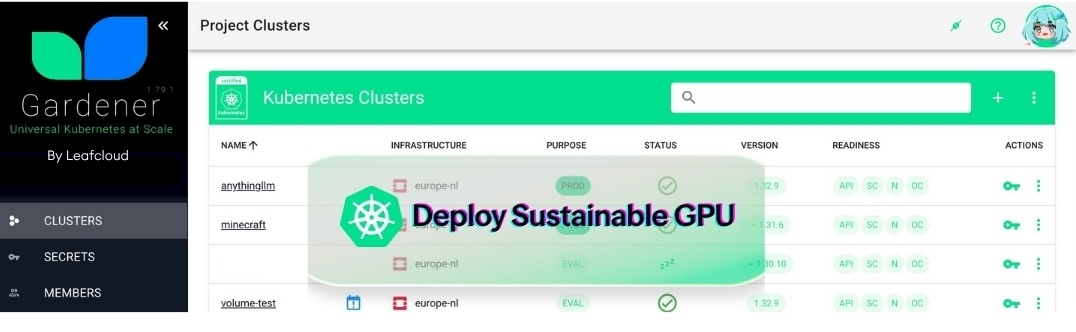

Leafcloud uses Gardener, the open-source Kubernetes management platform originally developed by SAP, to provision GPU-enabled clusters. Gardener is standard infrastructure designed for multi-cloud environments.

Create a cluster through the Gardener dashboard and select GPU-enabled worker nodes: A30, A100, H100, or (once available) Blackwell instances. Gardener then provisions the cluster on OpenStack, which takes a few minutes.

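Install the NVIDIA GPU Operator via Helm. The namespace is labeled to allow privileged pods, which the operator needs in order to set up drivers on the host, and NVIDIA’s chart repository is added before installing:

```shell
kubectl create ns gpu-operator
kubectl label --overwrite ns gpu-operator pod-security.kubernetes.io/enforce=privileged

# Register NVIDIA's chart repository (skip if it is already added)
helm repo add nvidia https://helm.ngc.nvidia.com/nvidia
helm repo update

helm install --wait --generate-name -n gpu-operator --create-namespace nvidia/gpu-operator --set toolkit.enabled=true
```

The operator detects GPUs and automatically installs the NVIDIA driver, the NVIDIA Container Toolkit, and the Kubernetes device plugin. Check that it worked:

```shell
kubectl describe nodes
```

On GPU nodes you’ll see labels such as nvidia.com/gpu.count, nvidia.com/gpu.product, and nvidia.com/gpu.memory, plus nvidia.com/gpu listed under Capacity and Allocatable.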
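For a scripted version of that check, you can sum each node’s allocatable nvidia.com/gpu count. This is a minimal sketch that assumes kubectl already targets the new cluster; count_allocatable_gpus is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# Sum the schedulable GPUs across all nodes in the current kubectl context.
# count_allocatable_gpus is a hypothetical helper name.
count_allocatable_gpus() {
  # One line per node: its allocatable nvidia.com/gpu value, or "<none>"
  # for nodes where the device plugin has not registered any GPUs.
  kubectl get nodes --no-headers \
    -o custom-columns='GPUS:.status.allocatable.nvidia\.com/gpu' |
    awk '$1 != "<none>" { total += $1 } END { print total + 0 }'
}
```

Running count_allocatable_gpus prints 0 until the operator’s device plugin has advertised GPUs, so it doubles as a simple readiness signal.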

Deploy your AI service with standard Kubernetes manifests. Add GPU resource requests (nvidia.com/gpu) to your pod specs, and Kubernetes schedules those pods only on nodes with free GPUs. A HorizontalPodAutoscaler (HPA) adds replicas when load increases and removes them when it drops.
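As a sketch of what a GPU resource request and an HPA look like in practice, the script below writes a Deployment and a HorizontalPodAutoscaler to gpu-inference.yaml. The names, container image, port, CPU request, and scaling thresholds are hypothetical placeholders; substitute your own.

```shell
#!/bin/sh
# Write a minimal GPU inference Deployment plus an HPA to a manifest file.
# All names, the image, the port, and the thresholds are hypothetical placeholders.
cat > gpu-inference.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gpu-inference
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gpu-inference
  template:
    metadata:
      labels:
        app: gpu-inference
    spec:
      containers:
        - name: server
          image: registry.example.com/gpu-inference:latest  # hypothetical image
          ports:
            - containerPort: 8080
          resources:
            requests:
              cpu: "2"           # needed for the CPU-based HPA below
            limits:
              nvidia.com/gpu: 1  # one whole GPU; schedulable only on GPU nodes
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: gpu-inference
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: gpu-inference
  minReplicas: 1
  maxReplicas: 4
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
EOF
```

Apply it with kubectl apply -f gpu-inference.yaml. For extended resources such as nvidia.com/gpu, Kubernetes uses the limit as the request when only a limit is set, so one line is enough. CPU utilization is only a proxy for traffic; scaling on requests per second would need a custom metrics pipeline, which is beyond this sketch. Each extra replica also needs a free GPU, so new pods stay Pending unless the GPU worker pool has spare capacity or room to scale up.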

Your deployment configs are portable YAML. No proprietary annotations. No vendor-specific quirks. Standard Kubernetes on OpenStack means your infrastructure adapts without rewriting code—particularly valuable for research projects where funding sources and compliance requirements shift over time.

Coming Soon: NVIDIA Blackwell RTX PRO 6000 on Leafcloud

Next-generation GPU compute for AI inference, media processing, and accelerated analytics, available on European, climate-positive infrastructure.

Why Dutch Infrastructure Changes the Equation

Where your GPUs physically run matters.

European operational control: for many organizations, GDPR compliance in practice means keeping research data, patient information, or citizen data within EU borders. Leafcloud’s Dutch infrastructure handles this: the servers operate under European jurisdiction, and the platform is ISO 27001 and SOC 2 Type 2 certified. For research institutes and government agencies, there’s also the question of who controls access to your infrastructure: concerns about foreign government access provisions, unexpected policy changes, or which legal framework governs your research computing.

Heat reuse, not waste: Leafcloud places servers in existing buildings with central heating systems. The heat generated by your inference workload provides free hot showers to residents of nursing homes and apartment blocks in Amsterdam. Your GPU compute contributes to local energy systems, with 85% of its heat reused, rather than venting waste heat into the atmosphere.
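To make the 85% figure concrete, here is a back-of-the-envelope estimate. It assumes (our reading, not a published Leafcloud formula) that the figure means 85% of a server’s electrical input is recovered as useful heat, and it takes a hypothetical node drawing 1 kW around the clock:

```latex
E_{\text{reused}} = \eta \, P \, t = 0.85 \times 1\,\text{kW} \times 24\,\text{h} = 20.4\,\text{kWh per day}
```

Scale P to your own node’s measured power draw to estimate its contribution.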

Vendor neutrality: OpenStack foundation. Gardener management. Standard Kubernetes. No proprietary APIs locking you in. No credit systems forcing you deeper into one vendor’s platform.

What This Actually Looks Like

You’re deploying an AI service that needs GPU inference.

Log in to the Gardener dashboard at dashboard.gardener.leaf.cloud. Create a cluster: name it and keep the infrastructure and DNS defaults. Configure the worker nodes by selecting a GPU machine type (A30, A100, or H100; Blackwell once available). Click create and wait a few minutes.
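Once the dashboard reports the cluster as created, you can confirm from the command line that the worker nodes have joined. A minimal sketch, assuming KUBECONFIG points at a kubeconfig for the new cluster obtained from the dashboard; wait_for_nodes is a hypothetical helper name, and the instance-type column assumes the cloud provider sets the standard node.kubernetes.io/instance-type label.

```shell
#!/bin/sh
# Wait until every node reports Ready, then list nodes with their machine type.
# wait_for_nodes is a hypothetical helper; KUBECONFIG must already point at
# the new cluster. The optional argument is a kubectl duration (default 15m).
wait_for_nodes() {
  node_timeout="${1:-15m}"
  # kubectl wait errors out if no nodes exist yet, so call this only after
  # the dashboard shows the cluster as ready.
  kubectl wait --for=condition=Ready nodes --all --timeout="$node_timeout" &&
    kubectl get nodes -L node.kubernetes.io/instance-type
}
```

For example, wait_for_nodes 10m returns non-zero if any node is still not Ready after ten minutes, which makes it easy to gate a deployment script on cluster readiness.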

Install the NVIDIA GPU Operator via Helm. Check its status with kubectl get pods -n gpu-operator. Once the pods are running (some validation pods finish as Completed), run kubectl describe nodes. GPU resources appear in the node labels and in the Capacity and Allocatable sections.
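The status check can also be scripted. A rough sketch, assuming kubectl targets the new cluster; gpu_operator_ready is a hypothetical helper name, and it looks only at each pod’s phase in the gpu-operator namespace.

```shell
#!/bin/sh
# Succeed only if every pod in gpu-operator is Running or Succeeded
# (kubectl get pods shows Succeeded as "Completed").
# gpu_operator_ready is a hypothetical helper name.
gpu_operator_ready() {
  phases=$(kubectl get pods -n gpu-operator --no-headers \
    -o custom-columns='PHASE:.status.phase')
  # No pods at all means the operator is not deployed (yet).
  [ -n "$phases" ] || return 1
  # Any other phase (Pending, Failed, Unknown) means not ready.
  ! printf '%s\n' "$phases" | grep -qvE '^(Running|Succeeded)$'
}
```

Phase is a coarse signal: a container that keeps crashing can leave its pod in the Running phase, so if this check passes but no GPUs show up on the nodes, the full kubectl get pods -n gpu-operator output is still the place to look.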

Deploy your service. Standard Kubernetes manifests with GPU resource requests in pod specs. Kubernetes schedules pods on GPU nodes. HPA scales with traffic.

Data stays in Europe. The heat generated by your inference workload provides free hot showers to residents of nursing homes and apartment blocks. Your configs are portable YAML.

No separate GPU console. No architectural compromises.

Start Building

Gardener-managed Kubernetes with GPU support is available now. A30, A100, and H100 instances ready for research workloads, government AI projects, and university compute needs. Blackwell coming soon.

Create an account or schedule a call to discuss your requirements.
